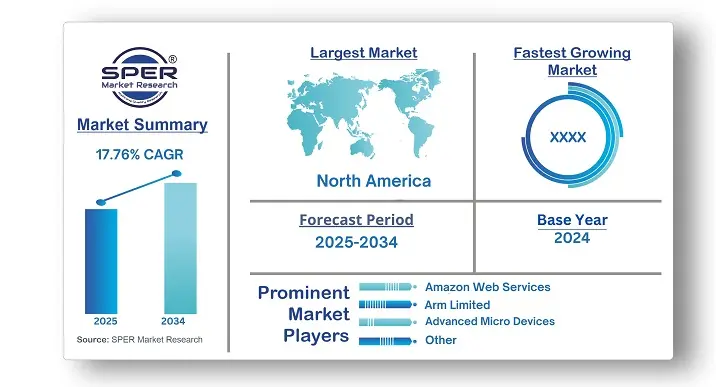

AI Inference Market Size, Share Trends and Forecast 2034

AI Inference Market Growth, Size, Trends Analysis - By Memory, By Compute, By Application, By End-User - Regional Outlook, Competitive Strategies and Segment Forecast to 2034

| Published: Sep-2025 | Report ID: IACT25166 | Pages: 1 - 235 | Formats*: |

| Category: Information & Communications Technology |

| Report Metric | Details |
| --- | --- |
| Market size available for years | 2021-2034 |
| Base year considered | 2024 |
| Forecast period | 2025-2034 |
| Segments covered | By Memory, By Compute, By Application, By End-User |
| Regions covered | North America, Latin America, Asia-Pacific, Europe, and Middle East & Africa |
| Companies covered | Amazon Web Services, Inc., Arm Limited, Advanced Micro Devices, Inc., Google LLC, Intel Corporation, Microsoft, Mythic, NVIDIA Corporation, Qualcomm Technologies, Inc. |

- Global AI Inference Market Size (FY 2021-FY 2034)

- Overview of Global AI Inference Market

- Segmentation of Global AI Inference Market By Memory (HBM, DDR)

- Segmentation of Global AI Inference Market By Compute (GPU, CPU, FPGA, NPU, Others)

- Segmentation of Global AI Inference Market By Application (Generative AI, Machine Learning, Natural Language Processing, Computer Vision, Others)

- Segmentation of Global AI Inference Market By End User (BFSI, Healthcare, Retail and E-commerce, Automotive, IT and Telecommunications, Manufacturing, Security, Others)

- Statistical Snapshot of Global AI Inference Market

- Expansion Analysis of Global AI Inference Market

- Problems and Obstacles in Global AI Inference Market

- Competitive Landscape in the Global AI Inference Market

- Details on Current Investment in Global AI Inference Market

- Competitive Analysis of Global AI Inference Market

- Prominent Players in the Global AI Inference Market

- SWOT Analysis of Global AI Inference Market

- Global AI Inference Market Future Outlook and Projections (FY 2025-FY 2034)

- Recommendations from Analyst

- 1.1. Scope of the report

- 1.2. Market segment analysis

- 2.1. Research data source

- 2.1.1. Secondary Data

- 2.1.2. Primary Data

- 2.1.3. SPER's internal database

- 2.1.4. Premium insight from KOLs

- 2.2. Market size estimation

- 2.2.1. Top-down and Bottom-up approach

- 2.3. Data triangulation

- 4.1. Driver, Restraint, Opportunity and Challenges analysis

- 4.1.1. Drivers

- 4.1.2. Restraints

- 4.1.3. Opportunities

- 4.1.4. Challenges

- 5.1. SWOT Analysis

- 5.1.1. Strengths

- 5.1.2. Weaknesses

- 5.1.3. Opportunities

- 5.1.4. Threats

- 5.2. PESTEL Analysis

- 5.2.1. Political Landscape

- 5.2.2. Economic Landscape

- 5.2.3. Social Landscape

- 5.2.4. Technological Landscape

- 5.2.5. Environmental Landscape

- 5.2.6. Legal Landscape

- 5.3. Porter's Five Forces

- 5.3.1. Bargaining power of suppliers

- 5.3.2. Bargaining power of buyers

- 5.3.3. Threat of substitutes

- 5.3.4. Threat of new entrants

- 5.3.5. Competitive rivalry

- 5.4. Heat Map Analysis

- 6.1. Global AI Inference Market Manufacturing Base Distribution, Sales Area, Product Type

- 6.2. Mergers & Acquisitions, Partnerships, Product Launch, and Collaboration in Global AI Inference Market

- 7.1. HBM (High Bandwidth Memory)

- 7.2. DDR (Double Data Rate)

- 8.1. GPU

- 8.2. CPU

- 8.3. FPGA

- 8.4. NPU

- 8.5. Others

- 9.1. Generative AI

- 9.2. Machine Learning

- 9.3. Natural Language Processing (NLP)

- 9.4. Computer Vision

- 9.5. Others

- 10.1. BFSI

- 10.2. Healthcare

- 10.3. Retail and E-commerce

- 10.4. Automotive

- 10.5. IT and Telecommunications

- 10.6. Manufacturing

- 10.7. Security

- 10.8. Others

- 11.1. Global AI Inference Market Size and Market Share

- 12.1. Asia-Pacific

- 12.1.1. Australia

- 12.1.2. China

- 12.1.3. India

- 12.1.4. Japan

- 12.1.5. South Korea

- 12.1.6. Rest of Asia-Pacific

- 12.2. Europe

- 12.2.1. France

- 12.2.2. Germany

- 12.2.3. Italy

- 12.2.4. Spain

- 12.2.5. United Kingdom

- 12.2.6. Rest of Europe

- 12.3. Middle East and Africa

- 12.3.1. Kingdom of Saudi Arabia

- 12.3.2. United Arab Emirates

- 12.3.3. Qatar

- 12.3.4. South Africa

- 12.3.5. Egypt

- 12.3.6. Morocco

- 12.3.7. Nigeria

- 12.3.8. Rest of Middle-East and Africa

- 12.4. North America

- 12.4.1. Canada

- 12.4.2. Mexico

- 12.4.3. United States

- 12.5. Latin America

- 12.5.1. Argentina

- 12.5.2. Brazil

- 12.5.3. Rest of Latin America

- 13.1. Amazon Web Services, Inc

- 13.1.1. Company details

- 13.1.2. Financial outlook

- 13.1.3. Product summary

- 13.1.4. Recent developments

- 13.2. Arm Limited

- 13.2.1. Company details

- 13.2.2. Financial outlook

- 13.2.3. Product summary

- 13.2.4. Recent developments

- 13.3. Advanced Micro Devices, Inc

- 13.3.1. Company details

- 13.3.2. Financial outlook

- 13.3.3. Product summary

- 13.3.4. Recent developments

- 13.4. Google LLC

- 13.4.1. Company details

- 13.4.2. Financial outlook

- 13.4.3. Product summary

- 13.4.4. Recent developments

- 13.5. Intel Corporation

- 13.5.1. Company details

- 13.5.2. Financial outlook

- 13.5.3. Product summary

- 13.5.4. Recent developments

- 13.6. Microsoft

- 13.6.1. Company details

- 13.6.2. Financial outlook

- 13.6.3. Product summary

- 13.6.4. Recent developments

- 13.7. Mythic

- 13.7.1. Company details

- 13.7.2. Financial outlook

- 13.7.3. Product summary

- 13.7.4. Recent developments

- 13.8. NVIDIA Corporation

- 13.8.1. Company details

- 13.8.2. Financial outlook

- 13.8.3. Product summary

- 13.8.4. Recent developments

- 13.9. Qualcomm Technologies, Inc

- 13.9.1. Company details

- 13.9.2. Financial outlook

- 13.9.3. Product summary

- 13.9.4. Recent developments

- 13.10. Sophos Ltd

- 13.10.1. Company details

- 13.10.2. Financial outlook

- 13.10.3. Product summary

- 13.10.4. Recent developments

- 13.11. Others

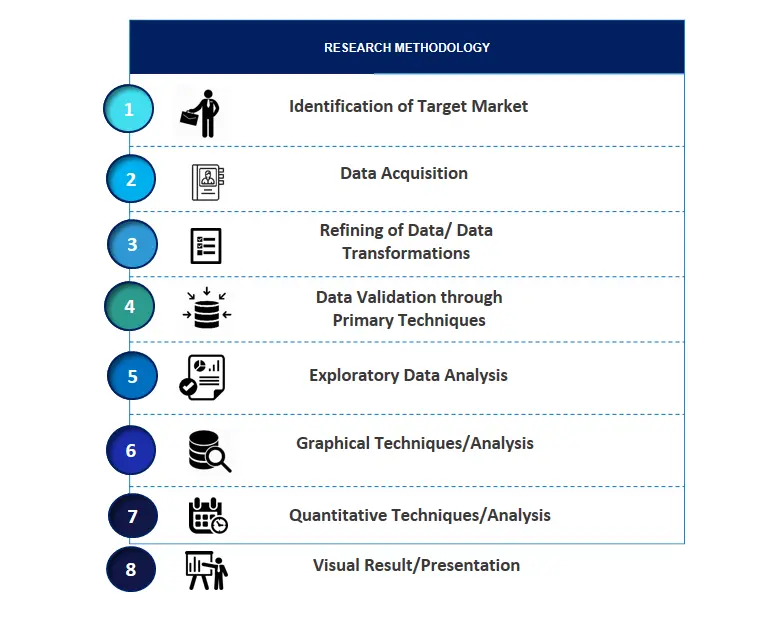

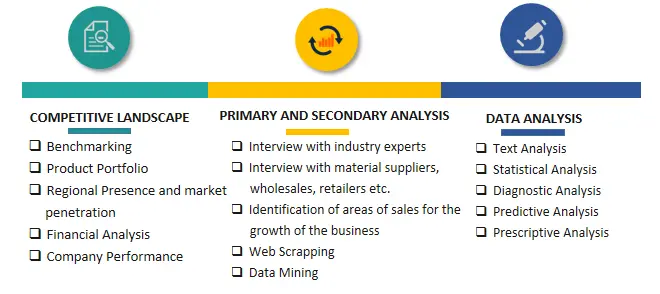

SPER Market Research's methodology places great emphasis on primary research to ensure that its market intelligence is up to date, reliable, and accurate. Primary interviews are conducted with players involved in each phase of the supply chain to inform the market forecast. Secondary research is used to build a fuller picture of how future markets and spending patterns are likely to look.

The report is based on in-depth qualitative and quantitative analysis of the market. The quantitative analysis applies various projection and sampling techniques. The qualitative analysis draws on primary interviews, surveys, and vendor briefings. The data gathered through these processes are validated against expert opinion. The research methodology thus combines primary and secondary research.
